Oracle Data Guard ensures high availability, data protection, and disaster recovery for enterprise data. Data Guard provides a comprehensive set of services that create, maintain, manage, and monitor one or more standby databases to enable production Oracle databases to survive disasters and data corruptions.

Data Guard can switch any standby database to the production role as and when required, minimizing the downtime associated with outage on primary database. Data Guard can be used with traditional backup, restoration, and cluster techniques to provide a high level of data protection and data availability.

In below post we will create a Physical Data Guard setup for testing purpose.

Below are the basic steps that we are going to follow to complete our Data Guard 11g setup:

ASSUMPTIONS

PART 1: UNDERSTANDING THE CONFIGURATION OPTIONS

1.1) CHOOSING PROTECTION MODES

1.2) CHOOSING REDO TRANSPORT

1.3) CHOOSING APPLY METHODS

1.4) CHOOSING ROLE TRANSITIONS

PART 2: CONFIGURING AND TUNING THE NETWORK

2.1) BANDWIDTH

2.2) ORACLE NET SERVICES SESSION DATA UNIT (SDU) SIZE

2.3) TCP TUNING

2.4) NETWORK DEVICE QUEUE SIZES

2.5) SRL FILES’ I/O TUNING

PART 3: CHOOSING THE TOOL TO CREATE DATA GUARD

PART 4: INSTALLING ORACLE DATABASE SOFTWARE FOR STANDBY

PART 5: CREATE PHYSICAL STANDBY DATABASE USING MANUAL METHOD

5.1) DATAGUARD RELATED PARAMETER SETUP

5.2) PREPARE THE STANDBY SYSTEM

5.3) PREPARE THE PRIMARY SYSTEM

5.4) FINALLY CREATE THE STANDBY DATABASE!

5.5) MODIFY THE REQIRED INIT PARAMETERS

5.6) VERIFY

ASSUMPTIONS:

Operating System : Oracle Enterprise Linux 6

Primary database is already existing and is in archivelog mode.

Primary Database Oracle SID: brij

Primary Database Oracle Home: /u01/oracle/DB11G/product/11.2.0/dbhome_1

Primary Database Version: 11.2.0.4

Standby database will be created as below

Standby Database Oracle SID: stdby

Standby Database Oracle Home: /backups/oracle/stdby/product/11.2.0/dbhome_1

Standby Database Version: 11.2.0.4

For testing purpose only we will create both Primary and Standby database in the same machine

PART 1 : UNDERSTANDING THE CONFIGURATION OPTIONS

This is the most important step in setting up your data guard. So before thinking anything about installation details of Datagurad follow through below steps to formulate a robust dataguard configuration plan.

1.1) CHOOSING PROTECTION MODES

This configuration option actually guides other configuration options like how redo will be transported or what the primary database will do when a standby or the network fails.

You have the option of choosing any one of the below protection mode.

1) Maximum Performance

This is the default mode.

Here Dataguard has to take care NOT to impact the performance of primary database and allow as little data loss as possible. So you can loss ‘some’ data in this protection mode but you will get highest degree of performance for your primary database.

So, if your standby database is disconnected from the primary database (network, system, or standby database failure issues etc), the primary database is not affected at all, which means redo generation at primary will not be paused at all. Also in this mode it doesn’t matter how far apart your primary and standby databases are.

The Maximum Performance protection mode is useful for applications that can tolerate some data loss in the event of a loss of the primary database.

Requirements for Maximum Performance mode:

> asynchronous transport (ASYNC) (mandatory)

> no affirmation of the standby I/O (NOAFFIRM). (mandatory)

> Standby Redo Log (SRL) Files (not mandatory but recommended)

Tips on Standby Redo Log Files

- SRL files must be the same size as your online redo log (ORL) files

- you need to have the same number of SRL files as you do ORL files, plus one

- SRL files need to be created on your standby as well as on your primary in preparation for switchover.

2) Maximum Availability

Here Data Guard has to take care to NOT allow any data loss without impacting the availability of the primary database. So standby will always remain in sync with primary database and at failover situation a synchronized standby database will result in zero data loss.

So if our applications cannot tolerate data loss in the event of a loss of the primary database and also we don’t want any downtime due to standby and/or network failures then Maximum Availability protection mode is useful.

CAVEAT:

There can be some situation where you can lose data in Maximum Availability mode:

Remember that SYNC of standby database depends on

a) Network b/w primary and standby

b) standby database itself.

If the network went down first or the standby went down and didn’t have a chance to resynchronize before the failover, then all redo generated at the primary database will be lost when you failover, Which means data loss

Requirements for Maximum Availability mode:

> synchronous transport (SYNC) (mandatory)

> affirmation of the standby I/O (AFFIRM). (mandatory)

> Standby Redo Log (SRL) Files (mandatory)

So unlike Maximum Performance mode here the SRL files are mandatory.

The difference between SYNC and ASYNC redo transport method is that in the SYNC mode LGWR process at primary databse will wait (maximum wait=NET_TIMEOUT value, default 30 SEC) for an acknowledgement from stand by database that redo sent to standby was received and recorded.

So looking at the difference in the way the SYNC redo transport method works, our success in configuring Maximum Availability mode really depends on some important factors like:

A) network bandwidth

B) network is tuned or not

C) distance between primary and secondary

D) redo generation rate at primary

3) Maximum Protection

Here Data Guard has to take care to NOT allow any data loss without any caveat (as discussed above in Maximum Availability mode) and if required primary database can be taken down to save it from any data-loss situation.

Requirements for Maximum Protection mode:

> synchronous transport (SYNC) (mandatory)

> affirmation of the standby I/O (AFFIRM). (mandatory)

> Standby Redo Log (SRL) Files (mandatory)

So you see the requirements for Maximum Protection and Maximum Availability mode are same.

Remember that a minimum of one standby database destination is required for primary database to run in Maximum Protection mode while in both Maximum Availability and Maximum Performance mode primary database can run even if no standby database is present.

Difference between Maximum Protection and Maximum Availability mode: In the SYNC method in Maximum Protection mode, LGWR process at primary databse will wait (maximum wait=NET_TIMEOUT value, default 30 SEC) for an acknowledgement from stand by database that redo sent to standby was received and recorded. If no response is received within NET_TIMEOUT value, the standby database is marked as failed and the LGWR continues committing transactions, ignoring the failed standby database as long as at least one synchronized standby database meets the requirements of Maximum Protection. And if the unreachable standby is the last remaining synchronized standby database, the primary database will abort. Before aborting the primary databse LGWR process will stall primary database and will try a reconnect to standby about 20 times and if it fails all the times, it will abort the primary database. In Maximum availability mode, data guard process will never abort primary database

So basically, the best way to use Maximum Protection mode is to create at least two standby databases that meet the requirements for the Maximum Protection mode. So that when one of them becomes unreachable, the primary database will continue generating redo without a pause of more than NET_TIMEOUT seconds. Meantime you can correct the errors in failed standby database.

So, below is how these three protection mode can be marked:

Low impact to primary Low impact to primary High impact to primary

High risk to data Medium risk to data Low risk to data

————————————————————————————————————————————————–>

Maximum Performance Maximum Availability Maximum Protection

Here in our setup we will first configure our dataguard setup to run Maximum Performance mode and later on will convert it to Maximum Availability and Maximum Protection for testing purpose.

1.2) CHOOSING REDO TRANSPORT

Now since you already chosen a the protiction mode for your data guard setup, next you need to choose how the redo generated at primary database will be transferred to standby database for applying.

For the Maximum Performance mode(ASYNC and NOAFFIRM) , the LOG_ARCHIVE_DEST_n parameter will look like below:

|

1

|

LOG_ARCHIVE_DEST_2=‘SERVICE=stdby ASYNC NOAFFIRM’

|

And for Maximum Availability or Maximum Protection mode (SYNC and AFFIRM) , the parameter will look like:

|

1

|

LOG_ARCHIVE_DEST_2=‘SERVICE=stdby SYNC AFFIRM’

|

Where:

stdby is our standby database

It is very important to configure and tune the network so that Data Guard can work successfully without long waits for redo to get transferred. Check “PART 2 : CONFIGURING AND TUNING THE NETWORK”.

1.3) CHOOSING APPLY METHODS

The apply method is the type of standby database you choose to set up—a physical standby database using Redo Apply or a logical standby database using SQL Apply.

What apply method we are going to use depends on:

1) redo generation rates

If redo generation rate is low, you can go for any of physical or logical standby database.

2) hardware resources

1.4) CHOOSING ROLE TRANSITIONS

switchover and failover are key terms that you should think of when creating the standby database.

PART 2 : CONFIGURING AND TUNING THE NETWORK

The key to successful working of Data Guard is ‘REDO’ and how quickly it flows in the data guard setup. You need to know how much redo your primary database will be generating at ‘peak’ times and ‘steady’ state.

The key network related parameters which have major impact on Data Guard functioning are:

2.1) BANDWIDTH

2.2) ORACLE NET SERVICES SESSION DATA UNIT (SDU) SIZE

2.3) TCP TUNING

2.4) NETWORK DEVICE QUEUE SIZES

2.5) SRL FILES’ I/O TUNING

2.1) BANDWIDTH

Bandwidth is ‘capacity’ of our network to send maximum amount of data bits at the same time. It is NOT the speed of our network so a very high bandwidth network doesn’t essentially means fastest network.

So to know how much bandwidth we will require we need to know the redo generation rate of our database.

Ways to find redo generation rate:

i) check the AWR report generated during steady state times and peak times.

ii) Look at primary database alert log, calculate the time between log switches during steady state and peak periods. Now add hom much MB of archive logs was generated for those log switches and divide this total MB by the total time to get the average megabytes of Redo generated per second.

NOTE: For RAC system you need to add up the MB from all instances to reach at final value.

Remember that you need to size the network for peak load and also you will always need ‘more’ bandwidth than your peak redo rate value. That ‘more’ bandwidth value can be 20% to 50% as per situation.

The bandwidth required is always ‘more’ than the redo generation rate as we also have to take into account the latency or round trip time (RTT) of the network. The latency can be caused by various hardware devices at each end, size of the data packet going through.

‘traceroute’ is good tool to track network latency but not a true measure as there can be various other reasons that affect our ability to ship redo.

2.2) ORACLE NET SERVICES SESSION DATA UNIT (SDU) SIZE

Oracle net send data to the network layer in units called session data unit (SDU). The default size of SDU is 8192 bytes which is not very efficient for Data Guard setup. Since large amounts of data are usually being transmitted to the standby, increasing the size of the SDU buffer can improve performance and network utilization.

You can configure high SDU globally within the sqlnet.ora file

DEFAULT_SDU_SIZE=32767

tnsnames.ora

STDBY= (DESCRIPTION= (SDU=32767) (ADDRESS=(PROTOCOL=tcp)(HOST=localhost.domain)(PORT=1521)) (CONNECT_DATA= (SERVICE_NAME= stdby)) )

Here STDBY is our standby database.

This will cause Data Guard to request 32,767 bytes for the session data unit whenever it makes a connection to the standby.

listener.ora

Here brij is our primary database.

Now the incoming connections from the standby database will also get the maximum SDU size.

Also, we will make similar changes in our standby database tnsnames.ora and listener.ora to make the standby system to use the same SDU size.

2.3) TCP TUNING

We need to prepare our TCP network layer to handle the large amounts of redo we will be sending during Data Guard processing

i) maximum TCP buffer space

It is amount of memory on the system that a single TCP connection can use.

sysctl -a can give you the current value

In our Linux system, the values are:

This maximum can prove sufficient but if necessary you need to increase this maximum limits.

ii) socket size

It is the values that a TCP connection will use for its send and receive buffers.

sysctl -a can give you the current value

Bandwidth-Delay Product (BDP) is important parameter in deciding these TCP related values.

here:

BDP = Bandwidth × Latency

It is generally seen that you need 3 times the value of BDP as you TCP socket buffer size.

So, like we did for SDU setup, we need to make these changes at Oracle Net Services level. Put this value in the tnsnames.ora and litener.ora files:

(SEND_BUF_SIZE=<value of BDP*3>)

(RECV_BUF_SIZE=<value of BDP*3>)

2.4) NETWORK DEVICE QUEUE SIZES

TCP local queue limit the number of buffers or packets that may be queued for transmit or they limit the number of receive buffers that are available for receiving packets.

When you have high rate of small-sized packets, you should tune both transmit or receive queue.

The transmit queue size is configured with the network interface option txqueuelen, and the network receive queue size is configured with the kernel parameter netdev_max_backlog.

In our system the values are:

transmit queue

txqueuelen:1000 (can be checked using ifconfig command)

for long-distance, high-bandwidth network links you can increase this value to 10000.

receive queue:

net.core.netdev_max_backlog = 1000 (can be checked using sysctl -a command)

Also if you have increased the transmit queue length, it is considered a good idea to increase the receive queue as well and usually it is increased 2 to 3 times as transmit queue.

If you change any of the queue values, you should change them in both primary and standby database servers.

2.5) SRL FILES’ I/O TUNING

RFS process at standby database uses SRL files writes the incoming redo so that it is persistent on disk for recovery.

In Maximum Availability and Maximum Protection modes as we have AFFIRM processing, so the disk write to the SRL file must occur prior to sending an acknowledgment back to the primary that the redo has been received but Maximum Performance mode has NOAFFIRM processing.

It is important that we optimize I/O of the SRL files on the standby.

Do not multiplex the SRLs as there is really no need and it will only increase the time lag.

In case of Maximum performance mode, Oracle database is configured for asynchronous I/O. We must also properly configure the operating system, host bus adapter (HBA) driver, and storage array.

DB Log buffer size and compression effects on ASYNC Redo Transport

In DB 11g, LNS read redo directly from the log buffer and if the redo to be sent is not found in the log buffer, then the LNS process will go to the ORL to retrieve it.

And in a bandwidth-strapped network LNS has to go down to archive log also sometimes.

As reading from log buffer is much faster than reading from disk (ORL), so the log buffer should be sized so that LNS is always able to find the redo that it needs to send within the log buffer. The log buffer hit ratio is tracked in the view X$LOGBUF_READHIST. A low hit ratio indicates that the LNS is frequently reading from the ORL instead of the log buffer.

The default value for log buffers is generally not that high (512KB). Increasing the log buffers improves the read speed of the LNS process.

Other important feature in 11g is redo transport compression, which is very beneficial when you have low-bandwidth. Redo transport communication can reduce both when you have low-bandwidth and redo transfer time.

There are some points to think upon before you actually use compression feature

> As compression take CPU so you should have sufficient CPU resources

> some kind of data like images can be compressed much as they are already compressed.

PART 3 : CHOOSING THE TOOL TO CREATE DATA GUARD

You can use any of the below interface to configure, manage, and use your Data Guard setup

A) SQL*Plus

B) Oracle Enterprise Manager Grid Control

C) Data Guard Broker

Grid Control uses the Data Guard Broker to set up and manage the configuration

In this guide we will use conventional tried and tested SQL*Plus method.

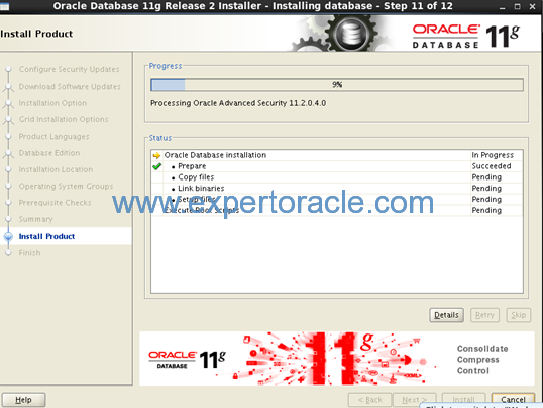

PART 4 : INSTALLING ORACLE DATABASE SOFTWARE FOR STANDBY

Set below parameters in the server where you want to create your standby database:

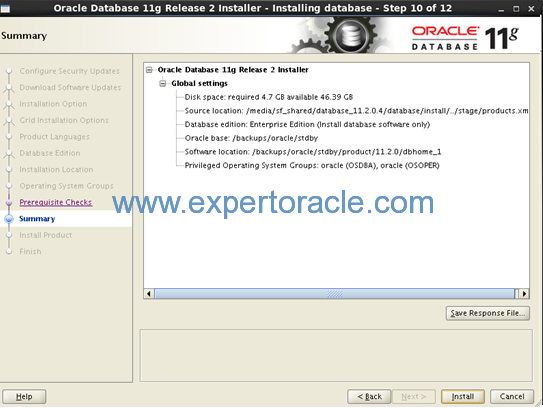

Use Oracle Database 11.2.0.4 runInstaller to start Oracle Universal Installer and install oracle database software ONLY.

i) Skip Software updates

ii) install database software only

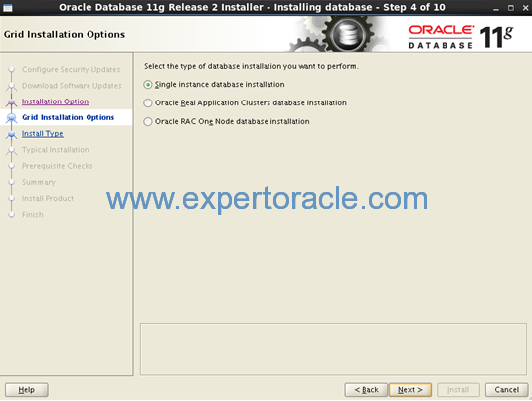

iii) Single instance database installation

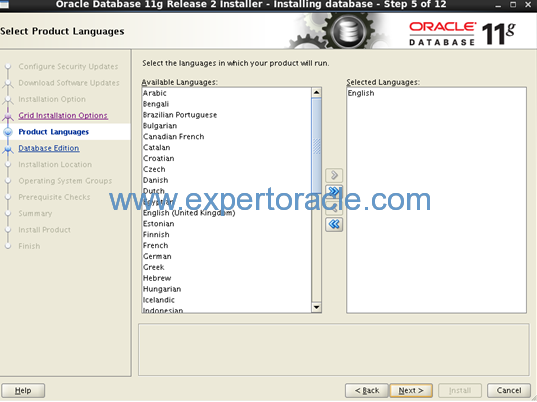

iv) select all required languages

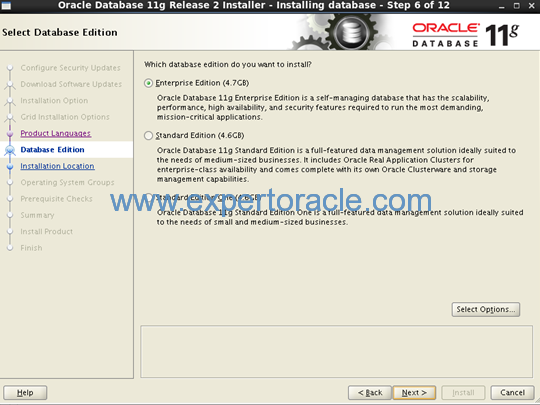

v) choose Enterprise Edition

vi) choose oracle_base and oracle_home locations

vii) choose osdba and osoper group

viii) review the configurations & click install

ix) Wait for install progress to complete

PART 5 : CREATE PHYSICAL STANDBY DATABASE USING MANUAL METHOD

RMAN is fast becoming the the best method to use to create standby database and the same will be used in our example.

5.1 DATAGUARD RELATED PARAMETER SETUP

i) PARAMETERS REQUIRED ON BOTH PRIMARY & STANDBY

a) DB_UNIQUE_NAME

This parameter defines the unique name for a database, if the parameter is not defined, it is defaulted to the DB_NAME.

Current settings in our system:

b) LOG_ARCHIVE_CONFIG

This defines the list of valid DB_UNIQUE_NAME parameters for your Data Guard configuration.

Current settings in our system:

SYS@brij > show parameter LOG_ARCHIVE_CONFIG NAME TYPE VALUE ----------------------------------------- log_archive_config string

Changes done:

SYS@brij > ALter system set log_archive_config='dg_config=(brij,stdby)' scope=both; System altered. SYS@brij > show parameter LOG_ARCHIVE_CONFIG NAME TYPE VALUE ----------------------------------------------------------- log_archive_config string dg_config=(brij,stdby)

c) LOG_ARCHIVE_MAX_PROCESSES

On the primary, one archive process is dedicated to servicing only the Online redo log files, while the others are all allowed to perform both functions

Current settings in our system:

SYS@brij > show parameter LOG_ARCHIVE_MAX_PROCESSES NAME TYPE VALUE ----------------------------------------------------- log_archive_max_processes integer 4

A value of 4 is good to start with to ensure that you do not have stalls on primary system in a time of a large gap of a slow network

d) DB_CREATE_FILE_DEST

you will need to define it at the standby database if you are using ASM

ii) PARAMETERS REQUIRED ONLY ON PRIMARY

e) LOG_ARCHIVE_DEST_n

This is the main parameter for Data Guard redo transport and we will understand it well before we proceed further with our installation.

Remember that the local archiving is defaulted to the flash recovery area and you no longer need to define a local destination. Below is the settings for the log_archive_dest_1.

This parameter requires following attributes:

1) SERVICE

It is the TNSNAMES descriptor which is pointing to standby database.

The value here is ‘STDBY’

2) SYNC or ASYNC

For Maximum Availability or Maximum Protection you must use SYNC and for Maximum Performance you use ASYNC. ASYNC is the default mode.

In our setup we will use Maximum Availability mode so we will be using ‘SYNC’

3) NET_TIMEOUT

The number of seconds that the LGWR process will wait for an LNS process to respond before abandoning the standby as failed. The default is 30 seconds

4) REOPEN

It Controls the wait time before Data Guard will allow the primary database to attempt a reconnection to a failed standby database. Its default value is 300 seconds but you can reduce it to 30 seconds depending on your requirements.

5) DB_UNIQUE_NAME

This parameter defines the unique name for a database, if the parameter is not defined, it is defaulted to the DB_NAME.

You must also set the LOG_ARCHIVE_CONFIG parameter before using this attribute in your LOG_ARCHIVE_DEST_n parameter

6)VALID_FOR

This parameters decides when LOG_ARCHIVE_DEST_n destination parameter should be used and on what type of redo log file it should operate.

Following are the possible values for log files:

> ONLINE_LOGFILE Valid only when archiving ORL files

> STANDBY_LOGFILE Valid only when archiving SRL files

> ALL_LOGFILES Valid regardless of redo log files type

Following are the possible values for roles:

> PRIMARY_ROLE Valid only when the database is running in the primary role

> STANDBY_ROLE Valid only when the database is running in the standby role

> ALL_ROLES Valid regardless of database role

Only if the answer to both of its parameters is TRUE, LOG_ARCHIVE_DEST_n destination parameter will be used

Also LOG_ARCHIVE_DEST_n can have 30 available destinations, so up to thirty standby databases.

So for our system we will set below value in our primary database:

alter system set log_archive_dest_2="service=stdby SYNC REOPEN=30 NET_TIMEOUT=30 valid_for=(ONLINE_LOGFILES,PRIMARY_ROLE) db_unique_name='stdby'" scope=both;

The following attributes are optional in LOG_ARCHIVE_DEST_n:

1) AFFIRM/NOAFFIRM

AFFIRM is default for SYNC destinations if not specified.

NOAFFIRM Default for ASYNC destinations if not specified.

2) COMPRESSION

You can turn on compression using the Advanced Compression option for standby destination. It can compress archives for gap resolution or current redo stream or both.

For redo stream, the compression is only during transport and not at disk level.

3) LOCATION

Earlier this attribute was required to specify a location where the archive processes could store the archive log files. With the flash recovery area and local archiving defaults, you no longer need to define a destination with this attribute.

4) MAX_FAILURE

It defines how many times at log switch time the LGWR will attempt to reconnect to a failed standby database and if it still is unsuccessful in reconnecting to the

standby database, it will stop trying it.

iii) PARAMETERS REQUIRED ONLY ON STANDBY

f) DB_FILE_NAME_CONVERT

This is necessary parameter if your directory structures are different between primary and standby databases. Until the standby database becomes a primary database, this

translation occurs only at the runtime, but once switchover or failover to the standby has occured, these values are hard coded into the control file and the data file headers.

For our standby system it will be:

db_file_name_convert='/u01/oracle/DB11G','/backups/oracle/stdby'

We will use this parameter when we will run RMAN duplicate command.

g) LOG_FILE_NAME_CONVERT

It is for the ORL files and any SRL files.

For our standby system it will be:

log_file_name_convert='/u01/oracle/DB11G/fast_recovery_area/BRIJ' ,'/backups/oracle/stdby/fast_recovery_area/stdby'

We will use this parameter when we will run RMAN duplicate command.

h) FAL_SERVER

FAL means Fetch Archive Log. This is also known as reactive gap resolution and is the process where a physical standby can go and fetch a missing archive log file

from one of the databases (primary or standby) in the Data Guard configuration when it finds a problem.

you define the FAL_SERVER parameter as a list to TNS names that exist on the standby server that point to the primary and any of the standby databases.

For our standby system it will be:

fal_server='brij'

We will update these parameter immediately after we are done with standby database creation.

i) FAL_CLIENT

It is the TNS name of the gap-requesting standby database that the receiver of the gap request (the FAL_SERVER) needs so that the archive process on the FAL server database can connect back to the requestor

For our standby system it will be:

fal_client='stdby'

‘stdy’ must be defined in the FAL server’s TNS names file so that Data Guard can make a connection to the standby database. since for redo transport parameters setup we already have put the ‘stdby’ value in priamry’s tnsnames so we are good.

We will update these parameter immediately after we are done with standby database creation.

j) STANDBY_FILE_MANAGEMENT

If you set this parameter to ‘AUTO’, then whenever data files are added or dropped from the primary database, the corresponding changes are automatically made on the standby database. Data Guard will execute the data definition language (DDL) on the standby to create the data file

By default, this parameter is set to ‘MANUAL’ but we will use ‘AUTO’ mode

For our standby system it will be:

standby_file_management=’AUTO’

We will update these parameter immediately after we are done with standby database creation.

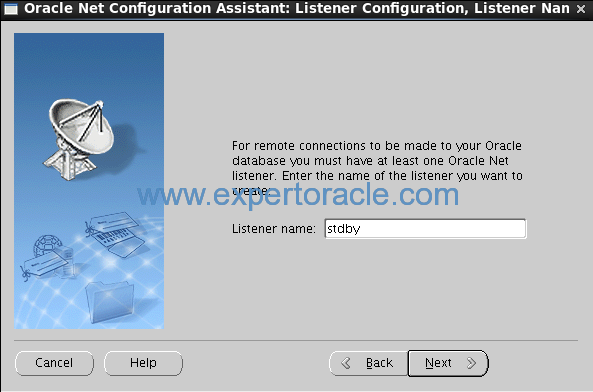

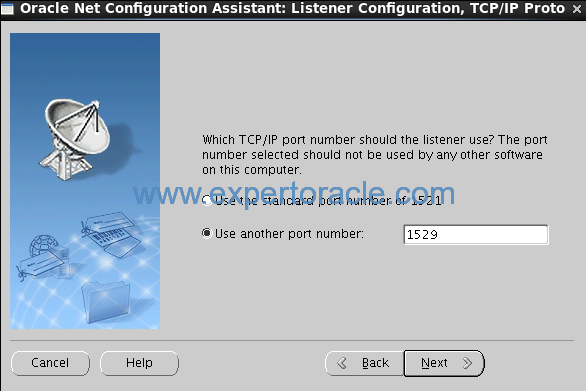

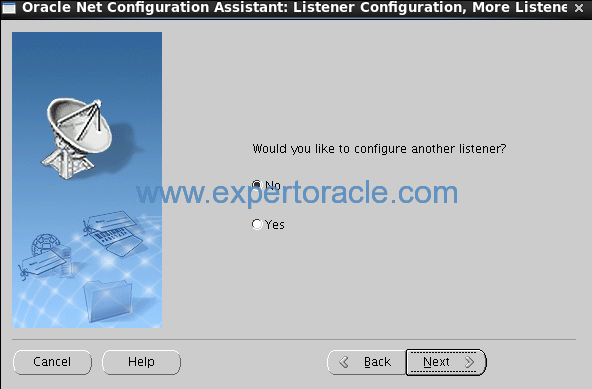

5.2) PREPARE THE STANDBY SYSTEM

5.2.1 Setup Listener and Create a static listener entry for the standby

On newly created standby system

$ cd $ORACLE_HOME/bin

$ netca

We will keep ‘stdby’ as listener name.

We will use port 1529 for Standby database.

This will complete the Listener configuration.

Now create a standard static entry in the standby listener

SID_LIST_stdby = (SID_LIST = (SID_DESC = (SDU=32767) (GLOBAL_DBNAME = stdby) (ORACLE_HOME = /backups/oracle/stdby/product/11.2.0/dbhome_2) (SID_NAME = stdby) ) )

Make sure you reload the listener after you put this in the listener file:

5.2.2 Create tnsnames.ora file and add both standby and target entries

BRIJ = (DESCRIPTION = (SDU=32767) (ADDRESS = (PROTOCOL = TCP)(HOST = oraclelinux6.localdomain)(PORT = 1521)) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = brij) ) ) stdby = (DESCRIPTION = (SDU=32767) (ADDRESS = (PROTOCOL = TCP)(HOST = oraclelinux6.localdomain)(PORT = 1529)) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = stdby) ) )

5.2.3 Create an init<sid>.ora file

As of now just put only basic parameters in it. During the standby database creation, RMAN will replace this file.

We have used only below five basic parameters.

You can only put db_name in this file and it will be sufficient too.

5.2.4 Create a password file

Create a password file with the primary database SYS password.

5.2.5 Start up the standby instance

You must start the standby database in NOMOUNT mode so RMAN process can attach to the instance.

5.2.6 Check the Listener services

At this moment check the standby listener if ti is registering well with the standby database or not.

$ lsnrctl status stdby LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 20-MAR-2014 14:45:06 Copyright (c) 1991, 2013, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=oraclelinux6.localdomain)(PORT=1529))) STATUS of the LISTENER ------------------------ Alias stdby Version TNSLSNR for Linux: Version 11.2.0.4.0 - Production Start Date 20-MAR-2014 14:44:41 Uptime 0 days 0 hr. 0 min. 25 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /backups/oracle/stdby/product/11.2.0/dbhome_2/network/admin/listener.ora Listener Log File /backups/oracle/stdby/diag/tnslsnr/oraclelinux6/stdby/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=oraclelinux6.localdomain)(PORT=1529))) (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC1529))) Services Summary... Service "stdby" has 1 instance(s). Instance "stdby", status <strong>UNKNOWN</strong>, has 1 handler(s) for this service... The command completed successfully

5.3 PREPARE THE PRIMARY SYSTEM

By using the new RMAN functionality of Oracle Database 11g, we have saved some time as we will not be taking RMAN backup of primary database manually. This method suits us as our database size is small. If you have a really big database with TBs of data files and also have hige network load, then conventional RMAN method of backing up at primary and recovering at standby is best for you.

5.3.1 Create SRL group

Aas we will be using SRL files, so if we create them on the primary database before we create the standby, RMAN will create them for us on the standby database during standby database creation.

5.3.2 Add standby database entry to the tnsnames.ora file

Our tnsnames.ora file at the Primary database looks like below with both primary & standby entries in it.

BRIJ = (DESCRIPTION = (SDU=32767) (ADDRESS = (PROTOCOL = TCP)(HOST = oraclelinux6.localdomain)(PORT = 1521)) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = brij) ) ) stdby = (DESCRIPTION = (SDU=32767)(ADDRESS = (PROTOCOL = TCP)(HOST = oraclelinux6.localdomain)(PORT = 1529)) (CONNECT_DATA =(SERVER = DEDICATED) (SERVICE_NAME = stdby) ) )

5.3.3 Enable logging

This is the recommended Data Guard setting, as this ensures that all transactions are logged and can be recovered through media recovery or redo apply.

5.4 FINALLY CREATE THE STANDBY DATABASE!

We are using here Active duplicate feature of 11g database. Oracle 11g introduced active database duplication using which we can create a clone database of the target database without taking any manual backups. Active database duplication copies the target database over the network to the destination and then creates the duplicate database. Only difference is you don’t need to have the pre-existing RMAN backups and copies. The duplication work is performed by an auxiliary channel.

Below is the work log of the above RMAN command:

$ rman

Recovery Manager: Release 11.2.0.4.0 - Production on Thu Mar 20 15:50:43 2014

Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.

RMAN> @connect.rman

RMAN> CONNECT TARGET *

connected to target database: BRIJ (DBID=1279650270)

RMAN> CONNECT AUXILIARY *

connected to auxiliary database: STDBY (not mounted)

RMAN> **end-of-file**

RMAN> @stdby.rman

RMAN> run {

2> allocate channel c1 type disk;

3> allocate channel c2 type disk;

4> allocate channel c3 type disk;

5> allocate auxiliary channel stby1 type disk;

6> duplicate target database for standby from active database

7> spfile

8> parameter_value_convert 'brij','stdby'

9> set 'db_unique_name'='stdby'

10> set control_files='/backups/oracle/stdby/oradata/control.ctl'

11> set db_create_file_dest='/backups/oracle/stdby/oradata'

12> set audit_file_dest='/backups/oracle/stdby/admin/stdby/adump'

13> set db_create_online_log_dest_1='/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'

14> set db_create_online_log_dest_2='/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'

15> set db_recovery_file_dest='/backups/oracle/stdby/fast_recovery_area/stdby'

16> set DB_RECOVERY_FILE_DEST_SIZE='4G'

17> nofilenamecheck;

18> }

using target database control file instead of recovery catalog

allocated channel: c1

channel c1: SID=19 device type=DISK

allocated channel: c2

channel c2: SID=133 device type=DISK

allocated channel: c3

channel c3: SID=20 device type=DISK

allocated channel: stby1

channel stby1: SID=171 device type=DISK

Starting Duplicate Db at 20-MAR-2014 15:50:55

contents of Memory Script:

{

backup as copy reuse

targetfile '/u01/oracle/DB11G/product/11.2.0/dbhome_1/dbs/orapwbrij' auxiliary format

'/backups/oracle/stdby/product/11.2.0/dbhome_2/dbs/orapwstdby' targetfile

'/u01/oracle/DB11G/product/11.2.0/dbhome_1/dbs/spfilebrij.ora' auxiliary format

'/backups/oracle/stdby/product/11.2.0/dbhome_2/dbs/spfilestdby.ora' ;

sql clone "alter system set spfile= ''/backups/oracle/stdby/product/11.2.0/dbhome_2/dbs/spfilestdby.ora''";

}

executing Memory Script

Starting backup at 20-MAR-2014 15:50:55

Finished backup at 20-MAR-2014 15:50:56

sql statement: alter system set spfile= ''/backups/oracle/stdby/product/11.2.0/dbhome_2/dbs/spfilestdby.ora''

contents of Memory Script:

{

sql clone "alter system set dispatchers =

''(PROTOCOL=TCP) (SERVICE=stdbyXDB)'' comment=

'''' scope=spfile";

sql clone "alter system set db_unique_name =

''stdby'' comment=

'''' scope=spfile";

sql clone "alter system set control_files =

''/backups/oracle/stdby/oradata/control.ctl'' comment=

'''' scope=spfile";

sql clone "alter system set db_create_file_dest =

''/backups/oracle/stdby/oradata'' comment=

'''' scope=spfile";

sql clone "alter system set audit_file_dest =

''/backups/oracle/stdby/admin/stdby/adump'' comment=

'''' scope=spfile";

sql clone "alter system set db_create_online_log_dest_1 =

''/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'' comment=

'''' scope=spfile";

sql clone "alter system set db_create_online_log_dest_2 =

''/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'' comment=

'''' scope=spfile";

sql clone "alter system set db_recovery_file_dest =

''/backups/oracle/stdby/fast_recovery_area/stdby'' comment=

'''' scope=spfile";

sql clone "alter system set DB_RECOVERY_FILE_DEST_SIZE =

4G comment=

'''' scope=spfile";

shutdown clone immediate;

startup clone nomount;

}

executing Memory Script

sql statement: alter system set dispatchers = ''(PROTOCOL=TCP) (SERVICE=stdbyXDB)'' comment= '''' scope=spfile

sql statement: alter system set db_unique_name = ''stdby'' comment= '''' scope=spfile

sql statement: alter system set control_files = ''/backups/oracle/stdby/oradata/control.ctl'' comment= '''' scope=spfile

sql statement: alter system set db_create_file_dest = ''/backups/oracle/stdby/oradata'' comment= '''' scope=spfile

sql statement: alter system set audit_file_dest = ''/backups/oracle/stdby/admin/stdby/adump'' comment= '''' scope=spfile

sql statement: alter system set db_create_online_log_dest_1 = ''/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'' comment= '''' scope=spfile

sql statement: alter system set db_create_online_log_dest_2 = ''/backups/oracle/stdby/fast_recovery_area/stdby/onlinelog'' comment= '''' scope=spfile

sql statement: alter system set db_recovery_file_dest = ''/backups/oracle/stdby/fast_recovery_area/stdby'' comment= '''' scope=spfile

sql statement: alter system set DB_RECOVERY_FILE_DEST_SIZE = 4G comment= '''' scope=spfile

Oracle instance shut down

connected to auxiliary database (not started)

Oracle instance started

Total System Global Area 1068937216 bytes

Fixed Size 2260088 bytes

Variable Size 671089544 bytes

Database Buffers 390070272 bytes

Redo Buffers 5517312 bytes

allocated channel: stby1

channel stby1: SID=133 device type=DISK

contents of Memory Script:

{

backup as copy current controlfile for standby auxiliary format '/backups/oracle/stdby/oradata/control.ctl';

}

executing Memory Script

Starting backup at 20-MAR-2014 15:51:06

channel c1: starting datafile copy

copying standby control file

output file name=/u01/oracle/DB11G/product/11.2.0/dbhome_1/dbs/snapcf_brij.f tag=TAG20140320T155106 RECID=29 STAMP=842716268

channel c1: datafile copy complete, elapsed time: 00:00:03

Finished backup at 20-MAR-2014 15:51:09

contents of Memory Script:

{

sql clone 'alter database mount standby database';

}

executing Memory Script

sql statement: alter database mount standby database

contents of Memory Script:

{

set newname for clone tempfile 1 to new;

switch clone tempfile all;

set newname for clone datafile 1 to new;

set newname for clone datafile 2 to new;

set newname for clone datafile 3 to new;

set newname for clone datafile 4 to new;

set newname for clone datafile 5 to new;

set newname for clone datafile 6 to new;

set newname for clone datafile 7 to new;

set newname for clone datafile 8 to new;

backup as copy reuse

datafile 1 auxiliary format new

datafile 2 auxiliary format new

datafile 3 auxiliary format new

datafile 4 auxiliary format new

datafile 5 auxiliary format new

datafile 6 auxiliary format new

datafile 7 auxiliary format new

datafile 8 auxiliary format new

;

sql 'alter system archive log current';

}

executing Memory Script

executing command: SET NEWNAME

renamed tempfile 1 to /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_temp_%u_.tmp in control file

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

Starting backup at 20-MAR-2014 15:51:16

channel c1: starting datafile copy

input datafile file number=00001 name=/u01/oracle/DB11G/oradata/brij/system01.dbf

channel c2: starting datafile copy

input datafile file number=00002 name=/u01/oracle/DB11G/oradata/brij/sysaux01.dbf

channel c3: starting datafile copy

input datafile file number=00007 name=/u01/oracle/DB11G/oradata/brij/system02.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_54p3ll3l_.dbf tag=TAG20140320T155116

channel c3: datafile copy complete, elapsed time: 00:02:52

channel c3: starting datafile copy

input datafile file number=00005 name=/u01/oracle/DB11G/oradata/brij/example01.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_sysaux_53p3ll3l_.dbf tag=TAG20140320T155116

channel c2: datafile copy complete, elapsed time: 00:02:53

channel c2: starting datafile copy

input datafile file number=00008 name=/u01/oracle/DB11G/oradata/brij/undotbs02.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_52p3ll3l_.dbf tag=TAG20140320T155116

channel c1: datafile copy complete, elapsed time: 00:03:39

channel c1: starting datafile copy

input datafile file number=00003 name=/u01/oracle/DB11G/oradata/brij/undotbs01.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_57p3llak_.dbf tag=TAG20140320T155116

channel c1: datafile copy complete, elapsed time: 00:00:25

channel c1: starting datafile copy

input datafile file number=00006 name=/u01/oracle/DB11G/oradata/brij/users02.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_56p3ll93_.dbf tag=TAG20140320T155116

channel c2: datafile copy complete, elapsed time: 00:01:47

channel c2: starting datafile copy

input datafile file number=00004 name=/u01/oracle/DB11G/oradata/brij/users01.dbf

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_example_55p3ll92_.dbf tag=TAG20140320T155116

channel c3: datafile copy complete, elapsed time: 00:01:55

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_58p3llbk_.dbf tag=TAG20140320T155116

channel c1: datafile copy complete, elapsed time: 00:00:33

output file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_59p3llch_.dbf tag=TAG20140320T155116

channel c2: datafile copy complete, elapsed time: 00:00:03

Finished backup at 20-MAR-2014 15:56:06

sql statement: alter system archive log current

contents of Memory Script:

{

switch clone datafile all;

}

executing Memory Script

datafile 1 switched to datafile copy

input datafile copy RECID=29 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_52p3ll3l_.dbf

datafile 2 switched to datafile copy

input datafile copy RECID=30 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_sysaux_53p3ll3l_.dbf

datafile 3 switched to datafile copy

input datafile copy RECID=31 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_57p3llak_.dbf

datafile 4 switched to datafile copy

input datafile copy RECID=32 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_59p3llch_.dbf

datafile 5 switched to datafile copy

input datafile copy RECID=33 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_example_55p3ll92_.dbf

datafile 6 switched to datafile copy

input datafile copy RECID=34 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_58p3llbk_.dbf

datafile 7 switched to datafile copy

input datafile copy RECID=35 STAMP=842716568 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_54p3ll3l_.dbf

datafile 8 switched to datafile copy

input datafile copy RECID=36 STAMP=842716569 file name=/backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_56p3ll93_.dbf

Finished Duplicate Db at 20-MAR-2014 15:56:29

released channel: c1

released channel: c2

released channel: c3

released channel: stby1

RMAN> **end-of-file**

Our standby database is created now. It is a fully functioning physical standby database that is ready to receive redo.

5.5 MODIFY THE REQUIRED INIT PARAMETERS

IN STANDBY DATABASE

IN PRIMARY DATABASE

SYS@brij > ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(brij,stdby)'; System altered. SYS@brij > ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='service=stdby ASYNC DB_UNIQUE_NAME=stdby VALID_FOR=(primary_role,online_logfile)'; System altered. SYS@brij > ALTER SYSTEM SWITCH LOGFILE; System altered. SYS@brij > ALTER SYSTEM SET FAL_SERVER=stdby; System altered. SYS@brij > ALTER SYSTEM SET FAL_CLIENT=brij; System altered. SYS@brij > ALTER SYSTEM SET STANDBY_FILE_MANAGEMENT=AUTO; System altered.

Your physical standby database creation is complete now.

5.6 VERIFY

Check the standby database:

SQL> SELECT DATABASE_ROLE ROLE, NAME, DB_UNIQUE_NAME INSTANCE, OPEN_MODE MODE, PROTECTION_MODE P_MODE, PROTECTION_LEVEL P_LEVEL, SWITCHOVER_STATUS FROM V$DATABASE; ROLE NAME INSTANCE MODE P_MODE P_LEVEL SWITCHOVER_STATUS ---------------- --------- ------------ --------- -------------------- -------------------- PHYSICAL STANDBY BRIJ stdby MOUNTED MAXIMUM PERFORMANCE MAXIMUM PERFORMANCE TO PRIMARY NOTE: Remember that NAME (db_name) will remain same for both primary and physical standby but DB_UNIQUE_NAME will be different.

SQL> select name from v$datafile; NAME ------------------------------------------------------------------------------------ /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_4op30rp8_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_sysaux_4pp30rp8_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_4tp30rv8_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_4vp30s0e_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_example_4rp30rtp_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_users_4up30s06_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_system_4qp30rp8_.dbf /backups/oracle/stdby/oradata/STDBY/datafile/o1_mf_undotbs1_4sp30rue_.dbf 8 rows selected. SQL> select type, member from v$logfile; TYPE MEMBER ------- ---------------------------------------------------------------------------------------------------- ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_4_9l235hby_.log ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_5_9l235xp6_.log ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_5_9l235y1z_.log ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_4_9l235hhv_.log ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_6_9l236cm5_.log ONLINE /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_6_9l236ctk_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_1_9l234x3d_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_2_9l2352p4_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_3_9l23594v_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_7_9l236s5b_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_1_9l234x8q_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_2_9l2352rr_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_3_9l235980_.log STANDBY /backups/oracle/stdby/fast_recovery_area/stdby/onlinelog/STDBY/onlinelog/o1_mf_7_9l236s9w_.log 14 rows selected.

Also to test the log shipment, execute the following command in primary database

SYS@stdby> select thread#,sequence#,applied from v$archived_log; THREAD# SEQUENCE# APPLIED ---------- ---------- --------- 1 29 YES 1 30 YES 1 31 YES 1 33 NO 1 32 NO

- Oracle Multitenant DB 4 : Parameters/SGA/PGA management in CDB-PDB - July 18, 2020

- Details of running concurrent requests in Oracle EBS - May 26, 2020

- Oracle Multitenant DB 3 : Data Dictionary Architecture in CDB-PDB - March 20, 2020

This is excellent post. Well organized document and easy to follow through. Thanks

excellent stuff

good one

Hi Brij,

I am thinking mentioning of db_file_name_covert and log_file_name_convert in the rman duplication is not there.. probably missed out.. otherwise really good stuff

Yes, you are right. I used DB_CREATE_FILE_DEST initialization parameter instead of db_file_name_covert. “db_file_name_covert parameter” has precedence over DB_CREATE_FILE_DEST initialization parameter. I mentioned that I will use db_file_name_covert parameter but later on chose DB_CREATE_FILE_DEST instead. You can use any one based on your needs and file system availability on the standby side.

Hi Brij,

Well that was a real quick response.. Appreciate it… guess then db_create_file_dest is not specific to only ASM… it can be used in normal RMAN duplication as well.